As a new developer, I want to ensure that any web apps I build are free of any type of discrimination, including unintentional discrimination. I’m exploring this topic to learn from past f*ck-ups and understand the tools I wield and how I can better use them to shape inclusive user experiences. I’m going to look back at Airbnb and #AirbnbWhileBlack to examine what went wrong and how they’ve worked to uncover and start correcting discrimination and bias on their platform.

I’m extremely passionate about building a more equitable web for everyone, and I believe how we build web applications from the ground up matters. While researching this article, I landed on three questions that I think are essential to consider in any web app: What is a name? How does the orchestra curtain fight implicit bias? And do you need data collection?

The History of #AirbnbWhileBlack

Back in July 2015, the hashtag #AirbnbWhileBlack began to circulate after Quirtina Crittenden started sharing her Airbnb rejection experiences on Twitter. She tried many times to book Airbnb places that were listed as available, only to be rejected over and over. When she shortened her name to Tina and changed her profile picture to a landscape photo, her booking issues magically disappeared. It shouldn’t take a rocket scientist to see the issue here.

Fast forward to December 2015: Harvard University researchers demonstrated that racial discrimination among Airbnb hosts was widespread. The report by Benjamin Edelman, Michael Luca, and Dan Svirsky warns that “In particular, revealing too much information may sometimes have adverse effects, including facilitating discrimination.” Check out the abstract:

Online marketplaces increasingly choose to reduce the anonymity of buyers and sellers in order to facilitate trust. We demonstrate that this common market design choice results in an important unintended consequence: racial discrimination. In a field experiment on Airbnb, we find that requests from guests with distinctively African-American names are roughly 16% less likely to be accepted than identical guests with distinctively White names. The difference persists whether the host is African American or White, male or female. The difference also persists whether the host shares the property with the guest or not, and whether the property is cheap or expensive. Discrimination is costly for hosts who indulge in it: hosts who reject African-American guests are able to find a replacement guest only 35% of the time. On the whole, our analysis suggests a need for caution: while information can facilitate transactions, it also facilitates discrimination.

While Airbnb was rightly criticized for a continuing failure to adequately deal with clear discrimination on its platform, it took a significant step forward in 2016. In an effort to fight that discrimination, Airbnb asked Laura W. Murphy, a civil rights advocate and ACLU veteran, to outline a blueprint that Airbnb could follow to create a more inclusive platform.

Three months later, in the report “Airbnb’s Work to Fight Discrimination and Build Inclusion,” she states the following:

Airbnb is engaging in frank and sustained conversations about bias on its platform. More noteworthily, however, Airbnb is putting in place powerful systemic changes to greatly reduce the opportunity for hosts and guests to engage in conscious or unconscious discriminatory conduct.

What makes Airbnb an interesting case study is not just its failures but also the platform’s actionable changes. Let’s fast forward again to September 2019. Airbnb released a three-year review of its work to fight discrimination and build inclusion, work in which Laura W. Murphy was still involved. In the foreword, Laura identifies five ways in which Airbnb “has improved its operations in specific ways to thwart bias:”

- The Community Commitment, an explicit pledge to treat everyone with respect, without judgement or bias, is a standing requirement for all existing and new members of the community;

- The company has improved access to listings for people with disabilities by developing new and more effective filters to search for accommodation with appropriate features;

- Airbnb announced in October 2018 that it would no longer display guest profile photos to hosts prior to the acceptance of a booking request by a host;

- In July 2019, Airbnb announced that nearly 70 percent of its accommodations can be booked using Airbnb’s Instant Book, which doesn’t require prior approval from the host of a specific guest. This helps reduce the potential for bias because hosts automatically accept guests who meet objective criteria set out by the hosts; and

- Offensive or discriminatory content in messages can now be more easily flagged and reported by any user.

And these changes are still not the end of Airbnb’s commitment to creating a more inclusive platform. Airbnb’s anti-discrimination team is currently working on measuring the gap between acceptance rates for guests in two different groups. That work is the start of Project Lighthouse, an initiative launched in the United States to uncover, measure, and overcome discrimination when booking or hosting on Airbnb.
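The core arithmetic behind measuring such a gap is simple, even though Airbnb’s actual Project Lighthouse methodology is far more careful about privacy and about how group membership is determined. The TypeScript sketch below assumes a hypothetical `BookingRequest` record with a `group` label and an `accepted` flag; `acceptanceRates` and `acceptanceGap` are illustrative names, not Airbnb’s code. It computes each group’s acceptance rate (accepted requests divided by total requests) and returns the difference between two groups.

```typescript
// Hypothetical record: one entry per booking request. The `group` label is used
// only for aggregate measurement and is never shown to hosts.
interface BookingRequest {
  group: string;
  accepted: boolean;
}

interface Tally {
  accepted: number;
  total: number;
}

function hasOwn(obj: object, key: string): boolean {
  return Object.prototype.hasOwnProperty.call(obj, key);
}

// Acceptance rate per group: accepted requests / total requests.
function acceptanceRates(requests: BookingRequest[]): Record<string, number> {
  const tallies: Record<string, Tally> = {};
  for (const r of requests) {
    if (!hasOwn(tallies, r.group)) {
      tallies[r.group] = { accepted: 0, total: 0 };
    }
    tallies[r.group].total += 1;
    if (r.accepted) {
      tallies[r.group].accepted += 1;
    }
  }
  const rates: Record<string, number> = {};
  for (const group of Object.keys(tallies)) {
    rates[group] = tallies[group].accepted / tallies[group].total;
  }
  return rates;
}

// Difference in acceptance rate between two groups (groupA minus groupB).
// A positive value means groupA's requests are accepted more often.
function acceptanceGap(requests: BookingRequest[], groupA: string, groupB: string): number {
  const rates = acceptanceRates(requests);
  if (!hasOwn(rates, groupA) || !hasOwn(rates, groupB)) {
    throw new Error("Both groups need at least one booking request to compare");
  }
  return rates[groupA] - rates[groupB];
}

// Made-up sample data, for illustration only.
const sample: BookingRequest[] = [
  { group: "A", accepted: true },
  { group: "A", accepted: true },
  { group: "A", accepted: false },
  { group: "A", accepted: true },
  { group: "B", accepted: true },
  { group: "B", accepted: false },
  { group: "B", accepted: false },
  { group: "B", accepted: true },
];
console.log(acceptanceGap(sample, "A", "B")); // 0.25 (0.75 - 0.5)
```

This returns an absolute difference in rates. A phrase like “roughly 16% less likely” describes a relative difference instead, which you would get by dividing the gap by the more-accepted group’s rate. Either way, a raw gap is only a starting point: a real measurement also needs enough requests per group for the difference to mean anything.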

What We Can Learn From Airbnb

When I look back over the past six years of Airbnb’s mistakes and its work to combat discrimination, I want to think about what I can learn and how I can avoid discrimination from the get-go. I really enjoyed reading the article “Fixing Discrimination in Online Marketplaces” by Ray Fisman and Michael Luca (Michael was part of the team that wrote the 2015 study of racial discrimination on Airbnb), and I’d highly recommend giving it a read. They discuss four design decisions that I think highlight the issues we need to be aware of as web developers:

- Are we providing too much information? In many cases, the simplest, most effective change a platform can make is to withhold information such as race and gender until after a transaction has been agreed to.

- Could we further automate the process? Features such as “instant book,” allowing a buyer to sign up for a rental, say, without the seller’s prior approval, can reduce discrimination while increasing convenience.

- Can we make discrimination policies more top-of-mind? Presenting them during the actual transaction process, rather than burying them in fine print, makes them less likely to be broken.

- Should we make our algorithms discrimination-aware? To ensure fairness, designers need to track how race or gender affects the user experience and set explicit objectives.
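To make the automation point concrete, here is a minimal sketch of an instant-book check in TypeScript. The `InstantBookCriteria` and `StayRequest` types and the `qualifiesForInstantBook` function are hypothetical, not Airbnb’s API. The host sets objective criteria about the stay, and the request type carries no name, photo, or other identity fields, so the decision has nothing about who the guest is to react to.

```typescript
// Hypothetical host-defined criteria. Every field describes the stay, not the person.
interface InstantBookCriteria {
  maxGuests: number;
  minNights: number;
  maxNights: number;
  petsAllowed: boolean;
}

// Hypothetical request shape: deliberately has no name, photo, or other identity
// fields, so the acceptance decision cannot depend on them.
interface StayRequest {
  guests: number;
  nights: number;
  bringsPets: boolean;
  acceptedCommunityCommitment: boolean;
}

// True when the request meets every criterion and can be booked
// without the host manually reviewing the guest.
function qualifiesForInstantBook(request: StayRequest, criteria: InstantBookCriteria): boolean {
  return (
    request.acceptedCommunityCommitment &&
    request.guests >= 1 &&
    request.guests <= criteria.maxGuests &&
    request.nights >= criteria.minNights &&
    request.nights <= criteria.maxNights &&
    (criteria.petsAllowed || !request.bringsPets)
  );
}

const hostCriteria: InstantBookCriteria = { maxGuests: 4, minNights: 2, maxNights: 14, petsAllowed: false };

console.log(
  qualifiesForInstantBook({ guests: 2, nights: 3, bringsPets: false, acceptedCommunityCommitment: true }, hostCriteria)
); // true
console.log(
  qualifiesForInstantBook({ guests: 2, nights: 3, bringsPets: true, acceptedCommunityCommitment: true }, hostCriteria)
); // false: this host does not allow pets
```

A real listing would also check calendar availability; that is left out to keep the sketch short. The design choice that matters is the shape of `StayRequest`: if identity never enters the function, it cannot influence the outcome.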

Clearly, Airbnb was providing too much information before bookings were confirmed. While that might have increased trust, it also facilitated both explicit and implicit bias on both ends of the transaction. That’s why Airbnb reduced the prominence of profile photos and then, in 2018, decided to stop displaying guest profile photos before a booking is accepted. Airbnb also recognized that further automating the booking process through Instant Book could reduce the potential for bias. And it made discrimination policies more top-of-mind through the explicit Community Commitment, which binds all existing and new members of the community.

How We Build Web Applications Matters

When we look at Airbnb’s history, it’s clear that explicit design decisions are needed in today’s online marketplaces and sharing economy. How I build web applications will matter more to my users than I’ll ever understand as an English-speaking white man who’s never had to deal with discrimination or bias. But I’ll always work my hardest to make sure I build the most inclusive web applications possible. So, I challenge every developer to think about the following three questions:

What is a name?

How does a curtain fight implicit bias?

And do you need data collection?

What is a Name?

I’ll never do justice to just how wrong your assumptions about names probably are. You need to read Patrick McKenzie’s famous 2010 article “Falsehoods Programmers Believe About Names” and then follow it up with Tony Rogers’ 2018 article “Falsehoods Programmers Believe About Names – With Examples.”

Seriously, I’ll wait…

It’s shocking how easy it is to botch names. And the kicker is that your web application is almost always wrong, because, in the famous words of Patrick McKenzie, “anything someone tells you is their name is — by definition — an appropriate identifier for them.” And names matter to people. A name can be your chosen identity, your history, or your culture. And it’s just plain you. The W3C has a good section on “Personal names around the world.”
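One practical consequence is to validate names as little as possible. The TypeScript sketch below contrasts a common but flawed validator (a regular expression that assumes exactly two capitalized ASCII words) with a permissive one that only trims whitespace and rejects empty or absurdly long input. `naiveIsValidName`, `acceptName`, and the `MAX_NAME_LENGTH` cap are hypothetical names chosen for illustration, and the sample names are just examples.

```typescript
// A common but flawed validator: assumes exactly two capitalized ASCII words.
const naivePattern = /^[A-Z][a-z]+ [A-Z][a-z]+$/;

function naiveIsValidName(input: string): boolean {
  return naivePattern.test(input);
}

// Hypothetical upper bound, only to protect storage. Note that `length` counts
// UTF-16 code units, so keep the limit generous.
const MAX_NAME_LENGTH = 500;

// Accept whatever the person says their name is. The only checks are that the
// trimmed value is non-empty and not absurdly long; returns null otherwise.
function acceptName(input: string): string | null {
  const name = input.trim();
  if (name.length === 0 || name.length > MAX_NAME_LENGTH) {
    return null;
  }
  return name;
}

const examples = ["Quirtina Crittenden", "Tina", "José Núñez", "Mary-Kate O'Neil", "李小龍"];
for (const n of examples) {
  console.log(`${n}: naive=${naiveIsValidName(n)} permissive=${acceptName(n) !== null}`);
}
// Quirtina Crittenden: naive=true permissive=true
// Tina: naive=false permissive=true
// José Núñez: naive=false permissive=true
// Mary-Kate O'Neil: naive=false permissive=true
// 李小龍: naive=false permissive=true
```

Storing the name as one free-form string, rather than forcing it into first and last name fields, avoids a whole class of the falsehoods McKenzie lists.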

How Does The Orchestra Curtain Fight Implicit Bias?

Let’s examine the lesson from symphony orchestras discussed in Ray Fisman and Michael Luca’s article “Fixing Discrimination in Online Marketplaces.”

Consider how the challenge of creating diversity in U.S. symphony orchestras was met. In the mid-1960s, less than 10% of the musicians in the “big five” U.S. orchestras (Boston, Philadelphia, Chicago, New York, and Cleveland) were women. In the 1970s and 1980s, as part of a broader diversity initiative, the groups changed their audition procedures to eliminate potential bias. Instead of conducting auditions face-to-face, they seated musicians behind a screen or other divider. In a landmark 2000 study, economists Claudia Goldin and Cecilia Rouse found that the screen increased the success rate of female musicians by 160%. In fact, they attributed roughly a quarter of the orchestras’ increased gender diversity to this simple change. And with selection based more squarely on musical ability, the orchestras were undoubtedly better off.

We as developers have the same ability to construct an orchestra screen. How and when we present racial identities, disabilities, genders, ages, and, more plainly, all user data matters. Airbnb’s removal of guest profile photos prior to a booking and its push to increase the number of Instant Book listings are two examples of developers putting up curtains. When we remove the user’s identity prior to an agreement or transaction, we can significantly reduce the prevalence of explicit and implicit bias.
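One way to build that curtain is to shape what the server sends to the host based on the booking’s state, so identity fields never leave the backend before acceptance. Hiding them with CSS or client-side logic would still ship them to the host’s browser. The sketch assumes hypothetical `GuestProfile`, `HostView`, and `guestForHost` names. It also goes further than Airbnb’s 2018 change by withholding the guest’s name along with the photo; that is a design choice for illustration, not a description of Airbnb’s system.

```typescript
// Hypothetical full guest record stored by the platform.
interface GuestProfile {
  id: string;
  fullName: string;
  photoUrl: string;
  reviewCount: number;
}

type BookingStatus = "requested" | "accepted" | "declined";

// Hypothetical shape of what the host receives. Identity fields are optional
// because they stay behind the curtain until the booking is accepted.
interface HostView {
  id: string;
  reviewCount: number;
  fullName?: string;
  photoUrl?: string;
}

// Build the host-facing view on the server, so withheld fields are never sent
// to the host's browser at all.
function guestForHost(guest: GuestProfile, status: BookingStatus): HostView {
  const view: HostView = { id: guest.id, reviewCount: guest.reviewCount };
  if (status === "accepted") {
    view.fullName = guest.fullName;
    view.photoUrl = guest.photoUrl;
  }
  return view;
}

const guest: GuestProfile = {
  id: "g-123",
  fullName: "Example Guest",
  photoUrl: "https://example.com/photos/g-123.jpg",
  reviewCount: 12,
};
console.log(guestForHost(guest, "requested")); // only id and reviewCount
console.log(guestForHost(guest, "accepted").fullName); // Example Guest
```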

Do You Need Data Collection?

I want to take this a step further and ask: do you need data collection at all? It’s a strange question, so let’s start with trust. Trust is a lofty goal that sounds good on its face, but what is it, really? In today’s sharing economy and online marketplaces, you don’t know the other party. Building trust implies a developing relationship and, say, mutual understanding. When trust is tied to user identity, you end up with judgements based on appearance, perceived race, religion, gender identity, or anything else people perceive about a person. And that means your users are significantly hurt by discrimination and bias.

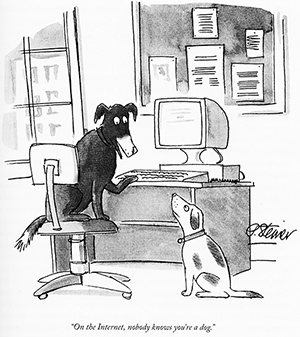

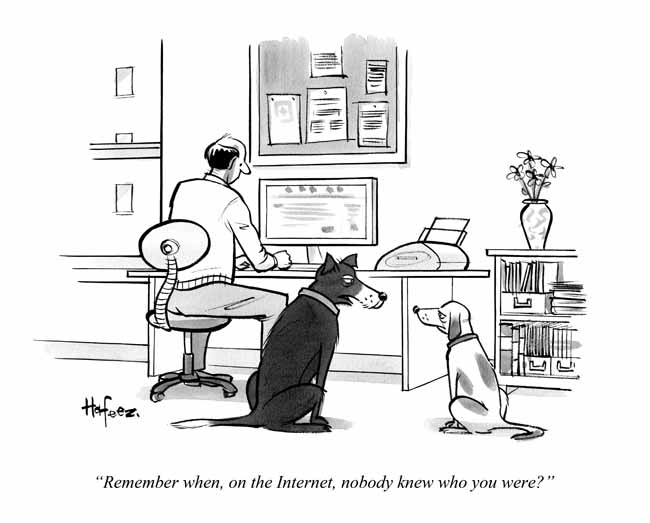

Think back to the early days of the web, when anonymity was key. If there is no significant purpose to collecting user data and metrics, why would you risk inadvertently hurting your users?

I know it’s not that cut and dried. Airbnb has some need to collect user identities to fight fraud or damage to rentals, but the vast majority of front-facing user data doesn’t seem to serve a net positive purpose for users in sharing economies and online marketplaces. Still, the era of full anonymity is over.

Data collection is a tough subject, often overshadowed by an insatiable desire to amass huge databases of user information for marketing and sales. But data collection does have an upside when it’s truly necessary. As Project Lighthouse shows, it lets you uncover, measure, and ultimately overcome discrimination, and it lets you monitor user demographics for broader trends that might signal discrimination or bias.

But all this leads me to wonder: if all Airbnb rentals were instant bookings, as with a hotel chain, where no user data reaches the host before booking, would Airbnb still have reached a point where it needed Project Lighthouse to track systemic discrimination by hosts? Possibly. I’m absolutely sure discrimination exists after a booking is confirmed, and that still needs to be addressed.

Building A User Interface That Shines

When designing and building web applications, it’s incredibly important to think about discrimination and bias from the get-go. Making our web applications inclusive isn’t just about rooting out discrimination and bias; we also need to think about accessibility. Check out the W3C Web Content Accessibility Guidelines (WCAG), which help “make content accessible to a wider range of people with disabilities, including blindness and low vision, deafness and hearing loss, learning disabilities, cognitive limitations, limited movement, speech disabilities, photosensitivity and combinations of these.”

I’m going to leave you with the Four Principles of Accessibility, and I’ll cover this topic in more detail in the future.

- Perceivable – Information and user interface components must be presentable to users in ways they can perceive.

  - This means that users must be able to perceive the information being presented (it can’t be invisible to all of their senses)

- Operable – User interface components and navigation must be operable.

  - This means that users must be able to operate the interface (the interface cannot require interaction that a user cannot perform)

- Understandable – Information and the operation of user interface must be understandable.

  - This means that users must be able to understand the information as well as the operation of the user interface (the content or operation cannot be beyond their understanding)

- Robust – Content must be robust enough that it can be interpreted reliably by a wide variety of user agents, including assistive technologies.

  - This means that users must be able to access the content as technologies advance (as technologies and user agents evolve, the content should remain accessible)
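As one concrete, checkable example of the Perceivable principle, WCAG 2.x defines a contrast ratio between text and its background, and success criterion 1.4.3 (level AA) asks for at least 4.5:1 for normal text and 3:1 for large text. Below is a minimal TypeScript sketch of that formula; it only accepts `#rrggbb` colors, and the function names are illustrative.

```typescript
// Convert an sRGB channel (0-255) to its linear value, following the WCAG 2.x
// definition of relative luminance.
function linearize(channel: number): number {
  const c = channel / 255;
  return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

// Relative luminance of a "#rrggbb" color: 0 for black, 1 for white.
function relativeLuminance(hex: string): number {
  const match = /^#([0-9a-f]{2})([0-9a-f]{2})([0-9a-f]{2})$/i.exec(hex);
  if (!match) {
    throw new Error(`Expected a #rrggbb color, got "${hex}"`);
  }
  const [r, g, b] = [match[1], match[2], match[3]].map((h) => linearize(parseInt(h, 16)));
  return 0.2126 * r + 0.7152 * g + 0.0722 * b;
}

// Contrast ratio (L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color's luminance.
function contrastRatio(foreground: string, background: string): number {
  const a = relativeLuminance(foreground);
  const b = relativeLuminance(background);
  const [lighter, darker] = a >= b ? [a, b] : [b, a];
  return (lighter + 0.05) / (darker + 0.05);
}

// SC 1.4.3 (AA): at least 4.5:1 for normal text, 3:1 for large text.
function meetsAA(foreground: string, background: string, largeText = false): boolean {
  return contrastRatio(foreground, background) >= (largeText ? 3 : 4.5);
}
```

For example, `contrastRatio("#767676", "#ffffff")` comes out to about 4.54, just above the AA threshold for normal text, while `#777777` on white is about 4.48 and fails. Small differences in a gray that look identical to some of us can decide whether text is readable for someone with low vision.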

What other ways are you addressing discrimination in your web applications? I’d love to hear from you!